揭秘多年珍藏的PostgreSQL.conf参数优化模板

发表时间: 2019-08-08 00:01

最近从github上看到的PG11参数模板,因为用的PG10比较多,所以我调整为PG10的一个参数模板了。

分享一下大佬博客:

https://github.com/digoal/blog

脚本中的端口、数据文件等位置需自定义修改。

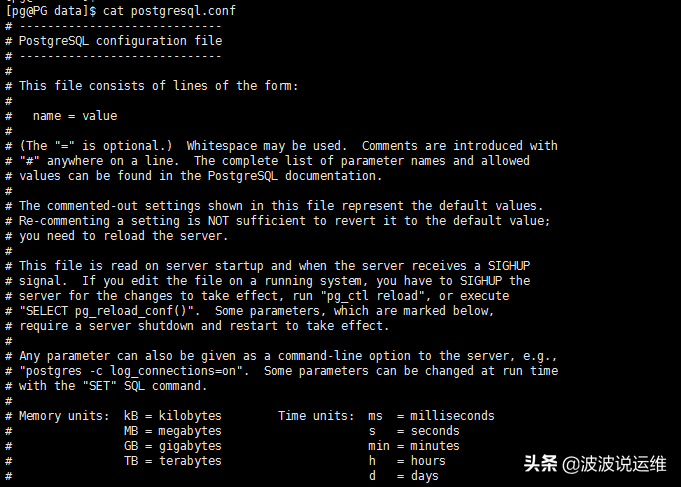

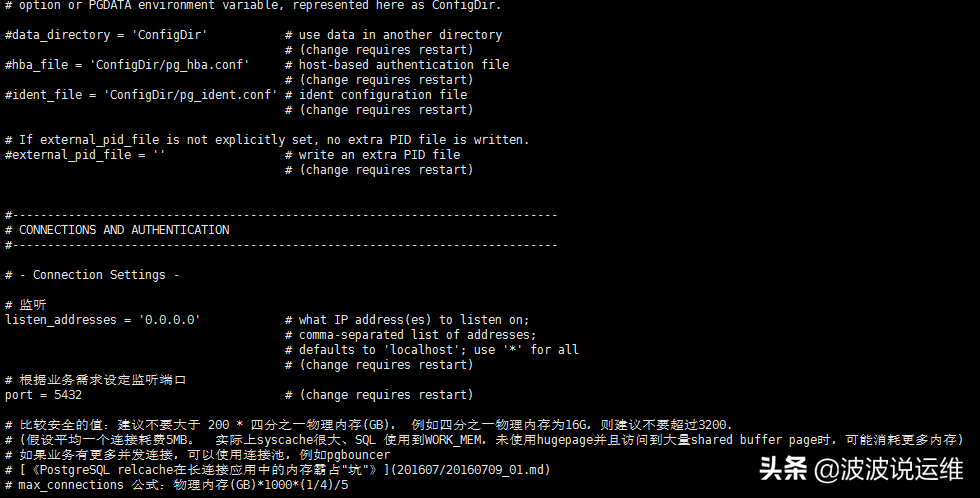

# ----------------------------- # PostgreSQL configuration file # - Connection Settings - # 监听 listen_addresses = '0.0.0.0' # what IP address(es) to listen on; # comma-separated list of addresses; # defaults to 'localhost'; use '*' for all # (change requires restart) # 根据业务需求设定监听端口 port = 5432 # (change requires restart) # 比较安全的值:建议不要大于 200 * 四分之一物理内存(GB), 例如四分之一物理内存为16G,则建议不要超过3200. # (假设平均一个连接耗费5MB。 实际上syscache很大、SQL 使用到WORK_MEM,未使用hugepage并且访问到大量shared buffer page时,可能消耗更多内存) # 如果业务有更多并发连接,可以使用连接池,例如pgbouncer # [《PostgreSQL relcache在长连接应用中的内存霸占"坑"》](201607/20160709_01.md) # max_connections 公式:物理内存(GB)*1000*(1/4)/5 max_connections = 200 # (change requires restart) # 为超级用户保留多少个连接 superuser_reserved_connections = 10 # (change requires restart) # $PGDATA, /tmp中 创建unix socket监听 unix_socket_directories = '., /tmp' # comma-separated list of directories # (change requires restart) #unix_socket_group = '' # (change requires restart) # 除了OWNER和超级用户,其他用户无法从/tmp unix socket连接该实例 unix_socket_permissions = 0700 # begin with 0 to use octal notation # (change requires restart) #bonjour = off # advertise server via Bonjour # (change requires restart) #bonjour_name = '' # defaults to the computer name # (change requires restart) # - TCP Keepalives - # see "man 7 tcp" for details # 如果你连接数据库空闲一段时间会端口,可能是网络中存在会话超时的设备,建议可以设置一下这个心跳时间,TCP心跳间隔会缩短到60秒。 tcp_keepalives_idle = 60 # TCP_KEEPIDLE, in seconds; # 0 selects the system default tcp_keepalives_interval = 10 # TCP_KEEPINTVL, in seconds; # 0 selects the system default tcp_keepalives_count = 10 # TCP_KEEPCNT; # 0 selects the system default # - Authentication - #authentication_timeout = 1min # 1s-600s # md5 or scram-sha-256 # 如果用户密码的MD5会泄露,建议使用scram-sha-256,但是相互不兼容,请注意。 password_encryption = md5 # md5 or scram-sha-256 #db_user_namespace = off # GSSAPI using Kerberos #krb_server_keyfile = '' #krb_caseins_users = off #------------------------------------------------------------------------------ # RESOURCE USAGE (except WAL) #------------------------------------------------------------------------------ # - Memory - # 1/4 主机内存 # shared_buffers 公式: IF use hugepage: 主机内存*(1/4) ELSE: min(32GB, 主机内存*(1/4)) shared_buffers = 1GB # min 128kB # 建议shared buffer设置超过32GB时 使用大页,页大小 /proc/meminfo Hugepagesize huge_pages = try # on, off, or try # (change requires restart) #temp_buffers = 8MB # min 800kB # 如果用户需要使用两阶段提交,需要设置为大于0,建议与max_connections一样大 # max_prepared_transactions 公式: max_prepared_transactions=max_connections max_prepared_transactions = 200 # zero disables the feature # (change requires restart) # Caution: it is not advisable to set max_prepared_transactions nonzero unless # you actively intend to use prepared transactions. # 可以在会话中设置,如果有大量JOIN,聚合操作,并且期望使用hash agg或hash join。 (排序,HASH都会用到work_mem) # 可以设大一些,但是不建议大于 四分之一内存除以最大连接数 . # (一条QUERY中可以使用多倍WORK_MEM,与执行计划中的NODE有关) # work_mem 公式: max(min(物理内存/4096, 64MB), 4MB) work_mem = 8MB # min 64kB # 创建索引时使用的内存空间。 # maintenance_work_mem 公式: min( 8G, (主机内存*1/8)/max_parallel_maintenance_workers ) maintenance_work_mem = 2GB # min 1MB # 在对一张表进行垃圾回收时,用于缓存垃圾版本的ctid, # 如果autovacuum work mem填满了,则VACUUM需要进入索引垃圾回收阶段,扫描索引(因此为了避免索引被多次扫描,需要缓存住一张表的所有垃圾TUPLE的CTID)。 # 一张表(细到单个最底层分区)垃圾回收结束后释放。 # autovacuum_work_mem 公式: min( 8G, (主机内存*1/8)/autovacuum_max_workers ) autovacuum_work_mem = 1GB #max_stack_depth = 2MB # min 100kB dynamic_shared_memory_type = posix # the default is the first option # - Disk - # 如果需要限制临时文件使用量,可以设置。 # 例如, 防止有异常的递归调用,无限使用临时文件。 #temp_file_limit = -1 # limits per-process temp file space # in kB, or -1 for no limit # - Kernel Resources - ## 如果你的数据库有非常多小文件(比如有几十万以上的表,还有索引等,并且每张表都会被访问到时), # 建议FD可以设多一些,避免进程需要打开关闭文件。 ## 但是不要大于前面章节系统设置的ulimit -n(open files) # max_files_per_process=655360 #max_files_per_process = 1000 # min 25 # (change requires restart) # - Cost-Based Vacuum Delay - # 如果你的系统IO非常好,则可以关闭vacuum delay , 避免因为垃圾回收任务周期长导致的膨胀。 vacuum_cost_delay = 0 # 0-100 milliseconds #vacuum_cost_page_hit = 1 # 0-10000 credits #vacuum_cost_page_miss = 10 # 0-10000 credits #vacuum_cost_page_dirty = 20 # 0-10000 credits # io很好,CPU核数很多的机器,设大一些。如果设置了vacuum_cost_delay = 0 ,则这个不需要配置 vacuum_cost_limit = 10000 # 1-10000 credits # - Background Writer - bgwriter_delay = 10ms # 10-10000ms between rounds bgwriter_lru_maxpages = 1000 # max buffers written/round, 0 disables bgwriter_lru_multiplier = 10.0 # 0-10.0 multiplier on buffers scanned/round bgwriter_flush_after = 512kB # measured in pages, 0 disables # - Asynchronous Behavior - effective_io_concurrency = 0 # 1-1000; 0 disables prefetching # wal sender, user 动态fork的process, parallel worker等都算作 worker process, 所以你需要设置足够大. max_worker_processes = 256 # (change requires restart) # 如果需要使用并行创建索引,设置为大于1 ,不建议超过 主机cores-4 # 这个参数pg10是不支持的# max_parallel_maintenance_workers 公式: min( max(2, CPU核数/2) , 16 ) # max_parallel_maintenance_workers = 6 # taken from max_parallel_workers # 如果需要使用并行查询,设置为大于1 ,不建议超过 主机cores-4 # max_parallel_workers_per_gather 公式: min( max(2, CPU核数-4) , 24 ) max_parallel_workers_per_gather = 0 # taken from max_parallel_workers # leader 是否与work process一起参与并行计算,如果ON,则并行度会默认+1。 # 这个参数pg10 不支持# parallel_leader_participation = on # 如果需要使用并行查询,设置为大于1 ,不建议超过 主机cores-2 # 必须小于 max_worker_processes # max_parallel_workers 公式: max(2, CPU核数-4) max_parallel_workers = 32 # maximum number of max_worker_processes that # can be used in parallel operations # 是否启用snapshot too old技术,避免长事务导致的膨胀 # 会导致性能一定的下降,约8% old_snapshot_threshold = 6h # 1min-60d; -1 disables; 0 is immediate # (change requires restart) #backend_flush_after = 256 # measured in pages, 0 disables #------------------------------------------------------------------------------ # WRITE-AHEAD LOG #------------------------------------------------------------------------------ # - Settings - # 需要流复制物理备库、归档、时间点恢复时,设置为replica,需要逻辑订阅或逻辑备库则设置为logical wal_level = replica # minimal, replica, or logical # (change requires restart) #fsync = on # flush data to disk for crash safety # (turning this off can cause # unrecoverable data corruption) # 如果双节点,设置为ON,如果是多副本,同步模式,建议设置为remote_write。 # 如果磁盘性能很差,并且是OLTP业务。可以考虑设置为off降低COMMIT的RT,提高吞吐(设置为OFF时,可能丢失部分XLOG RECORD) synchronous_commit = off # synchronization level; # off, local, remote_write, remote_apply, or on # 建议使用pg_test_fsync测试后,决定用哪个最快。通常LINUX下open_datasync比较快。 #wal_sync_method = fsync # the default is the first option # supported by the operating system: # open_datasync # fdatasync (default on Linux) # fsync # fsync_writethrough # open_sync # 如果文件系统支持COW例如ZFS,则建议设置为OFF。 # 如果文件系统可以保证datafile block size的原子写,在文件系统与IO系统对齐后也可以设置为OFF。 # 如果底层存储能保证IO的原子写,也可以设置为OFF。 full_page_writes = on # recover from partial page writes # 当写FULL PAGE WRITE的io是瓶颈时建议开启 wal_compression = on # enable compression of full-page writes # 如果要使用pg_rewind,flashback 时间线,需要打开这个功能 # [《PostgreSQL pg_rewind,时间线修复,脑裂修复,flashback - 从库开启读写后,回退为只读从库。异步主从发生角色切换后,主库rewind为新主库的从库》](201901/20190128_02.md) #wal_log_hints = off # also do full page writes of non-critical updates # (change requires restart) # 建议 min( WAL segment size(默认16MB) , shared_buffers/32 ) wal_buffers = 16MB # min 32kB, -1 sets based on shared_buffers # (change requires restart) # 如果设置了synchronous_commit = off,建议设置wal_writer_delay wal_writer_delay = 10ms # 1-10000 milliseconds wal_writer_flush_after = 1MB # measured in pages, 0 disables # 如果synchronous_commit=on, 并且已知业务系统为高并发,对数据库有写操作的小事务,则可以设置commit_delay来实现分组提交,合并WAL FSYNCIO 。 # 分组提交 #commit_delay = 10 # range 0-100000, in microseconds # 同时处于提交状态的事务数超过commit_siblings时,使用分组提交 #commit_siblings = 5 # range 1-1000 # - Checkpoints - # 不建议频繁做检查点,否则XLOG会产生很多的FULL PAGE WRITE(when full_page_writes=on)。 checkpoint_timeout = 30min # range 30s-1d # 建议等于SHARED BUFFER,或2倍。 # 同时需要考虑崩溃恢复时间, 越大,检查点可能拉越长导致崩溃恢复耗时越长。但是越小,开启FPW时,WAL日志写入量又越大。 建议采用COW文件系统,关闭FPW。 # max_wal_size 公式: # min(shared_buffers*2 , 用户存储空间/10) max_wal_size = 48GB # 建议是SHARED BUFFER的2分之一 # min_wal_size 公式: # min(shared_buffers/2 , 用户存储空间/10) min_wal_size = 12GB # 硬盘好(nvme ssd)的情况下,值越小可以让检查点快速结束,恢复时也可以快速达到一致状态。否则建议0.5~0.9 # 如果有hot standby作为HA节点,这个值也可以设置为0.5~0.9 避免写高峰时CHECKPOINT对写带来的冲击。 checkpoint_completion_target = 0.2 # checkpoint target duration, 0.0 - 1.0 # IO很好的机器,不需要考虑平滑调度, 否则建议128~256kB checkpoint_flush_after = 256kB # measured in pages, 0 disables #checkpoint_flush_after = 0 # measured in pages, 0 disables #checkpoint_warning = 30s # 0 disables # - Archiving - # 建议默认打开,因为修改它需要重启实例 # 打开后,一个WAL文件写满后,会在pg_wal/archive_status目录中创建xxxxxx.ready的文件,归档命令archive_command正常结束后,会清除这个状态文件。 archive_mode = on # enables archiving; off, on, or always # (change requires restart) # 后期再修改,如 'test ! -f /disk1/digoal/arch/%f && cp %p /disk1/digoal/arch/%f' #archive_command = '/bin/date' # command to use to archive a logfile segment # placeholders: %p = path of file to archive # %f = file name only # e.g. 'test ! -f /mnt/server/archivedir/%f && cp %p /mnt/server/archivedir/%f' #archive_timeout = 0 # force a logfile segment switch after this # number of seconds; 0 disables #------------------------------------------------------------------------------ # REPLICATION #------------------------------------------------------------------------------ # - Sending Servers - # Set these on the master and on any standby that will send replication data. # 同时允许几个流复制协议的连接,根据实际需求设定 ,可以设置一个默认值例如64 max_wal_senders = 64 # max number of walsender processes # 根据实际情况设置保留WAL的数量,主要是防止过早的清除WAL,导致备库因为主库的WAL清除而中断。根据实际情况设定。 #wal_keep_segments = 0 # in logfile segments; 0 disables #wal_sender_timeout = 60s # in milliseconds; 0 disables # 根据实际情况设置需要创建多少replication slot # 使用slot,可以保证流复制下游没有接收的WAL会在当前节点永久保留。所以必须留意下游的接收情况,否则可能导致WAL爆仓 # 建议大于等于max_wal_senders # max_replication_slots 公式: max_replication_slots=max_wal_senders max_replication_slots = 64 # max number of replication slots # (change requires restart) #track_commit_timestamp = off # collect timestamp of transaction commit # (change requires restart) # - Master Server - # These settings are ignored on a standby server. # 如果有2个或2个以上的备库,可以考虑使用同步多副本模式。 根据实际情况设置 #synchronous_standby_names = '' # standby servers that provide sync rep # method to choose sync standbys, number of sync standbys, # and comma-separated list of application_name # from standby(s); '*' = all # 注意,当数据库有大量的更新、删除操作时,设置这个值容易导致表膨胀,容易导致VACUUM进程空转,导致IO和CPU飙升。(特别是autovacuum naptime配置很小时) #vacuum_defer_cleanup_age = 0 # number of xacts by which cleanup is delayed # - Standby Servers - # These settings are ignored on a master server. hot_standby = on # "off" disallows queries during recovery # (change requires restart) # 当standby的archive replay与standby的用户SQL请求发生冲突时,在打断SQL前,允许用户SQL最长的执行时间. 打断SQL后,需要等STANDBY APPLY所有archive restore command 的WAL文件,APPLY才允许被继续打断。 max_standby_archive_delay = 120s # max delay before canceling queries # when reading WAL from archive; # -1 allows indefinite delay max_standby_streaming_delay = 120s # max delay before canceling queries # when reading streaming WAL; # -1 allows indefinite delay wal_receiver_status_interval = 1s # send replies at least this often # 0 disables # 建议关闭,以免备库长事务导致 主库无法回收垃圾而膨胀。 hot_standby_feedback = off # send info from standby to prevent # query conflicts #wal_receiver_timeout = 60s # time that receiver waits for # communication from master # in milliseconds; 0 disables #wal_retrieve_retry_interval = 5s # time to wait before retrying to # retrieve WAL after a failed attempt # - Subscribers - # 逻辑复制订阅端节点设置: # These settings are ignored on a publisher. # [《PostgreSQL 10.0 preview 逻辑订阅 - 原理与最佳实践》](201702/20170227_01.md) # These settings are ignored on a publisher. # 必须小于 max_worker_processes # 包括 apply workers and table synchronization workers # max_logical_replication_workers 公式: max_logical_replication_workers=max_wal_senders max_logical_replication_workers = 64 # taken from max_worker_processes # (change requires restart) # Maximum number of synchronization workers per subscription. This parameter controls the amount of parallelism of the initial data copy during the subscription initialization or when new tables are added. # max_sync_workers_per_subscription 公式: min ( 32 , max(2, CPU核数-4) ) max_sync_workers_per_subscription = 8 # taken from max_logical_replication_workers #------------------------------------------------------------------------------ # QUERY TUNING #------------------------------------------------------------------------------ # - Planner Cost Constants - #seq_page_cost = 1.0 # measured on an arbitrary scale # 离散IO很好的机器(例如ssd, nvme ssd),不需要考虑离散和顺序扫描的成本差异 random_page_cost = 1.1 # same scale as above #cpu_tuple_cost = 0.01 # same scale as above #cpu_index_tuple_cost = 0.005 # same scale as above #cpu_operator_cost = 0.0025 # same scale as above #parallel_tuple_cost = 0.1 # same scale as above #parallel_setup_cost = 1000.0 # same scale as above #jit_above_cost = 100000 # perform JIT compilation if available # and query more expensive, -1 disables #jit_optimize_above_cost = 500000 # optimize JITed functions if query is # more expensive, -1 disables #jit_inline_above_cost = 500000 # attempt to inline operators and # functions if query is more expensive, # -1 disables #min_parallel_table_scan_size = 8MB #min_parallel_index_scan_size = 512kB # 扣掉会话连接RSS,shared buffer, autovacuum worker, 剩下的都是OS可用的CACHE。 # effective_cache_size 公式: 主机内存*0.75 effective_cache_size = 80GB # - Genetic Query Optimizer - #geqo = on #geqo_threshold = 12 #geqo_effort = 5 # range 1-10 #geqo_pool_size = 0 # selects default based on effort #geqo_generations = 0 # selects default based on effort #geqo_selection_bias = 2.0 # range 1.5-2.0 #geqo_seed = 0.0 # range 0.0-1.0 # - Other Planner Options - #default_statistics_target = 100 # range 1-10000 #constraint_exclusion = partition # on, off, or partition #cursor_tuple_fraction = 0.1 # range 0.0-1.0 #from_collapse_limit = 8 #join_collapse_limit = 8 # 1 disables collapsing of explicit # JOIN clauses #force_parallel_mode = off #------------------------------------------------------------------------------ # REPORTING AND LOGGING #------------------------------------------------------------------------------ # - Where to Log - log_destination = 'csvlog' # Valid values are combinations of # stderr, csvlog, syslog, and eventlog, # depending on platform. csvlog # requires logging_collector to be on. # This is used when logging to stderr: logging_collector = on # Enable capturing of stderr and csvlog # into log files. Required to be on for # csvlogs. # (change requires restart) # These are only used if logging_collector is on: log_directory = 'log' # directory where log files are written, # can be absolute or relative to PGDATA # 时间格式 http://pubs.opengroup.org/onlinepubs/009695399/functions/strftime.html # 设置参考 https://www.postgresql.org/docs/11/runtime-config-logging.html#RUNTIME-CONFIG-LOGGING-WHERE # 日志保留一天,每个小时一个文件取决于log_rotation_age 每小时切换一下log_filename = 'postgresql-%H.log' # log file name pattern, # can include strftime() escapes # 日志保留一个月例子,每天一个文件取决于log_rotation_age 每天切换一下 # log_filename = 'postgresql-%d.log' # log file name pattern, # can include strftime() escapes # 日志保留一周例子,每天一个文件取决于log_rotation_age 每天切换一下 # log_filename = 'postgresql-%a.log' # log file name pattern, # can include strftime() escapes #log_file_mode = 0600 # creation mode for log files, # begin with 0 to use octal notation log_truncate_on_rotation = on # If on, an existing log file with the # same name as the new log file will be # truncated rather than appended to. # But such truncation only occurs on # time-driven rotation, not on restarts # or size-driven rotation. Default is # off, meaning append to existing files # in all cases. # 配合log_filename设置,建议与%后面的名字周期一致log_rotation_age = 1h # Automatic rotation of logfiles will # happen after that time. 0 disables. # 单个日志文件最大多大时,被截断,可以设置一个上限防止日志无限制增长 log_rotation_size = 1GB # log_rotation_size = 0 # Automatic rotation of logfiles will # happen after that much log output. # 0 disables. # These are relevant when logging to syslog: #syslog_facility = 'LOCAL0' #syslog_ident = 'postgres' #syslog_sequence_numbers = on #syslog_split_messages = on # This is only relevant when logging to eventlog (win32): # (change requires restart) #event_source = 'PostgreSQL' # - When to Log - #client_min_messages = notice # values in order of decreasing detail: # debug5 # debug4 # debug3 # debug2 # debug1 # log # notice # warning # error #log_min_messages = warning # values in order of decreasing detail: # debug5 # debug4 # debug3 # debug2 # debug1 # info # notice # warning # error # log # fatal # panic #log_min_error_statement = error # values in order of decreasing detail: # debug5 # debug4 # debug3 # debug2 # debug1 # info # notice # warning # error # log # fatal # panic (effectively off) # 根据实际情况设定,例如业务上认为5秒以上是慢SQL,那么就设置为5秒。 log_min_duration_statement = 5s # -1 is disabled, 0 logs all statements # and their durations, > 0 logs only # statements running at least this number # of milliseconds # - What to Log - #debug_print_parse = off #debug_print_rewritten = off #debug_print_plan = off #debug_pretty_print = on # 记录检查点的详细统计信息 log_checkpoints = on # 如果业务是短连接,建议设置为OFF,否则建议设置为ON log_connections = off # 如果业务是短连接,建议设置为OFF,否则建议设置为ON log_disconnections = off #log_duration = off # 记录错误代码的代码位置,是什么代码输出的日志,更好的跟踪问题 log_error_verbosity = verbose # terse, default, or verbose messages #log_hostname = off #log_line_prefix = '%m [%p] ' # special values: # %a = application name # %u = user name # %d = database name # %r = remote host and port # %h = remote host # %p = process ID # %t = timestamp without milliseconds # %m = timestamp with milliseconds # %n = timestamp with milliseconds (as a Unix epoch) # %i = command tag # %e = SQL state # %c = session ID # %l = session line number # %s = session start timestamp # %v = virtual transaction ID # %x = transaction ID (0 if none) # %q = stop here in non-session # processes # %% = '%' # e.g. '<%u%%%d> ' # 是否打印锁等待事件 log_lock_waits = on # log lock waits >= deadlock_timeout # 如果需要审计SQL,则可以设置为all log_statement = 'ddl' # none, ddl, mod, all #log_replication_commands = off # 当使用的临时文件超过多大时,打印到日志中,跟踪大SQL。 log_temp_files = 256MB # log temporary files equal or larger # than the specified size in kilobytes; # -1 disables, 0 logs all temp files log_timezone = 'PRC' #------------------------------------------------------------------------------ # PROCESS TITLE #------------------------------------------------------------------------------ #cluster_name = '' # added to process titles if nonempty # (change requires restart) #update_process_title = on #------------------------------------------------------------------------------ # STATISTICS #------------------------------------------------------------------------------ # - Query and Index Statistics Collector - #track_activities = on #track_counts = on # 跟踪IO耗时会带来一定的性能影响,默认是关闭的 # 如果需要统计IO的时间开销,设置为ON # 建议用pg_test_timing测试一下获取时间的开销,如果开销很大,建议关闭这个时间跟踪。 track_io_timing = on # 是否需要跟踪函数被调用的次数,耗时 track_functions = pl # none, pl, all # 单条被跟踪的QUERY最多能存储多少字节,如果有超长SQL,则日志中被截断。 根据需要设置 #track_activity_query_size = 1024 # (change requires restart) # 相对路径($PGDATA)或绝对路径。用于存储统计信息的临时目录。可以设置为ram based directory,提高性能 # Pointing this at a RAM-based file system will decrease physical I/O requirements and can lead to improved performance. #stats_temp_directory = 'pg_stat_tmp' # - Monitoring - #log_parser_stats = off #log_planner_stats = off #log_executor_stats = off #log_statement_stats = off #------------------------------------------------------------------------------ # AUTOVACUUM #------------------------------------------------------------------------------ # 打开自动垃圾回收 autovacuum = on # Enable autovacuum subprocess? 'on' # requires track_counts to also be on. log_autovacuum_min_duration = 0 # -1 disables, 0 logs all actions and # their durations, > 0 logs only # actions running at least this number # of milliseconds. # CPU核多,并且IO好的情况下,可多点,但是注意最多可能消耗这么多内存: # autovacuum_max_workers * autovacuum mem(autovacuum_work_mem), # 会消耗较多内存,所以内存也要有基础。 # 当DELETE\UPDATE非常频繁时,建议设置多一点,防止膨胀严重 # autovacuum_max_workers 公式: max(min( 8 , CPU核数/2 ) , 5) autovacuum_max_workers = 8 # max number of autovacuum subprocesses # (change requires restart) # 建议不要太高频率,否则会因为vacuum产生较多的XLOG。或者在某些垃圾回收不掉的情况下(例如长事务、feed back on,等),导致一直触发vacuum,CPU和IO都会升高 #autovacuum_naptime = 1min # time between autovacuum runs #autovacuum_vacuum_threshold = 50 # min number of row updates before # vacuum #autovacuum_analyze_threshold = 50 # min number of row updates before # analyze autovacuum_vacuum_scale_factor = 0.02 # fraction of table size before vacuum autovacuum_analyze_scale_factor = 0.01 # fraction of table size before analyze # 除了设置较大的FREEZE值。 # 还是需要注意FREEZE风暴 [《PostgreSQL Freeze 风暴预测续 - 珍藏级SQL》](201804/20180411_01.md) # 表级定制freeze #[《PostgreSQL 10 CLogControlLock 等待事件分析与优化 - hint bit, freeze, autovacuum, 风暴》](201903/20190319_02.md) autovacuum_freeze_max_age = 1200000000 # maximum XID age before forced vacuum # (change requires restart) autovacuum_multixact_freeze_max_age = 1250000000 # maximum multixact age # before forced vacuum # (change requires restart) # 如果数据库UPDATE非常频繁,建议设置为0。并且建议使用SSD autovacuum_vacuum_cost_delay = 0ms # default vacuum cost delay for # autovacuum, in milliseconds; # -1 means use vacuum_cost_delay #autovacuum_vacuum_cost_limit = -1 # default vacuum cost limit for # autovacuum, -1 means use # vacuum_cost_limit #------------------------------------------------------------------------------ # CLIENT CONNECTION DEFAULTS #------------------------------------------------------------------------------ # - Statement Behavior - #search_path = '"$user", public' # schema names #row_security = on #default_tablespace = '' # a tablespace name, '' uses the default # 临时表的表空间,可以设置多个,轮询使用。 # 临时表的表空间,建议为SSD目录。速度快。 #temp_tablespaces = '' # a list of tablespace names, '' uses # only default tablespace #check_function_bodies = on #default_transaction_isolation = 'read committed' #default_transaction_read_only = off #default_transaction_deferrable = off #session_replication_role = 'origin' # 可以用来防止雪崩,但是不建议全局设置 #statement_timeout = 0 # in milliseconds, 0 is disabled # 执行DDL时,建议加上超时 # 可以用来防止雪崩 #lock_timeout = 0 # in milliseconds, 0 is disabled # 空闲中事务自动清理,根据业务实际情况设置 idle_in_transaction_session_timeout = '6h' # in milliseconds, 0 is disabled #vacuum_freeze_min_age = 50000000 vacuum_freeze_table_age = 200000000 #vacuum_multixact_freeze_min_age = 5000000 vacuum_multixact_freeze_table_age = 200000000 #vacuum_cleanup_index_scale_factor = 0.1 # fraction of total number of tuples # before index cleanup, 0 always performs # index cleanup #bytea_output = 'hex' # hex, escape #xmlbinary = 'base64' #xmloption = 'content' # 限制GIN扫描的返回结果集大小,在想限制超多匹配的返回时可以设置 #gin_fuzzy_search_limit = 0 # GIN索引pending list的大小 #gin_pending_list_limit = 4MB # - Locale and Formatting - datestyle = 'iso, mdy' #intervalstyle = 'postgres' timezone = 'PRC' #timezone_abbreviations = 'Default' # Select the set of available time zone # abbreviations. Currently, there are # Default # Australia (historical usage) # India # You can create your own file in # share/timezonesets/. # 浮点精度扩展值 #[《PostgreSQL 浮点精度调整(extra_float_digits)》](201510/20151020_01.md) #extra_float_digits = 0 # min -15, max 3 #client_encoding = sql_ascii # actually, defaults to database # encoding # These settings are initialized by initdb, but they can be changed. lc_messages = 'C' # locale for system error message # strings lc_monetary = 'C' # locale for monetary formatting lc_numeric = 'C' # locale for number formatting lc_time = 'C' # locale for time formatting # default configuration for text search default_text_search_config = 'pg_catalog.english' # - Shared Library Preloading - # 需要加载什么LIB,预先加载,对于经常访问的库也建议预加载,例如postgis #shared_preload_libraries = 'pg_jieba,pipelinedb' # (change requires restart) shared_preload_libraries = 'pg_stat_statements' #local_preload_libraries = '' #session_preload_libraries = '' # - Other Defaults - #dynamic_library_path = '$libdir' # 是否已编译JIT特性,如果已编译,设置为ON #jit = off # allow JIT compilation #jit_provider = 'llvmjit' # JIT implementation to use #------------------------------------------------------------------------------ # LOCK MANAGEMENT #------------------------------------------------------------------------------ deadlock_timeout = 1s #max_locks_per_transaction = 64 # min 10 # (change requires restart) #max_pred_locks_per_transaction = 64 # min 10 # (change requires restart) #max_pred_locks_per_relation = -2 # negative values mean # (max_pred_locks_per_transaction # / -max_pred_locks_per_relation) - 1 #max_pred_locks_per_page = 2 # min 0

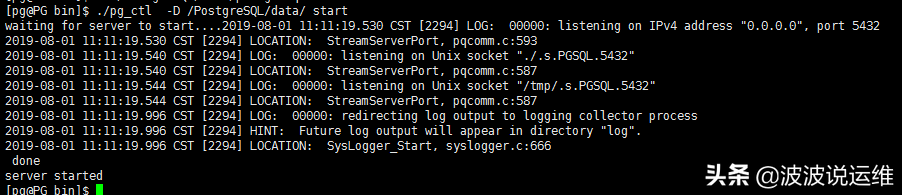

设置后重启生效

以上参数简单分类如下:

1、固定参数

listen_addresses='*' superuser_reserved_connections=100 tcp_keepalives_idle=45 tcp_keepalives_interval=10 tcp_keepalives_count=10 vacuum_cost_delay=0 vacuum_cost_limit=10000 bgwriter_delay=10ms bgwriter_lru_maxpages=1000 bgwriter_lru_multiplier=10.0 effective_io_concurrency=0 max_worker_processes=256 old_snapshot_threshold = -1 wal_level = replica wal_compression = on wal_buffers=16MB wal_writer_delay=10ms checkpoint_timeout = 25min checkpoint_completion_target = 0.4 max_wal_senders = 64 max_replication_slots = 56 max_logical_replication_workers = 56 hot_standby = on max_standby_archive_delay = 300s max_standby_streaming_delay = 300s wal_receiver_status_interval = 1s hot_standby_feedback = off wal_receiver_timeout = 30s random_page_cost=1.1 logging_collector=on log_truncate_on_rotation=on log_min_duration_statement=5s log_checkpoints=on log_lock_waits=on log_statement='ddl' log_temp_files=128MB track_io_timing=on track_functions=pl autovacuum = on log_autovacuum_min_duration=0 autovacuum_analyze_scale_factor = 0.05 autovacuum_freeze_max_age = 1200000000 autovacuum_multixact_freeze_max_age = 1400000000 autovacuum_vacuum_cost_delay=0 statement_timeout = 0 lock_timeout = 0 idle_in_transaction_session_timeout = '1h' vacuum_freeze_table_age = 200000000 vacuum_multixact_freeze_table_age = 200000000 deadlock_timeout = 1s auth_delay.milliseconds=3s enable_partitionwise_join = on enable_partitionwise_aggregate = on pg_stat_statements.max=5000 pg_stat_statements.save=off pg_stat_statements.track=top pg_stat_statements.track_utility=off track_activity_query_size = 1024 unix_socket_directories = '.' unix_socket_permissions = 0700 jit = off # allow JIT compilation jit_provider = 'llvmjit' # JIT implementation to use log_timezone='UTC' huge_pages=trylog_error_verbosity=verbose log_rotation_age=1h log_rotation_size = 100MB log_filename = 'postgresql-%H.log' autovacuum_vacuum_scale_factor = 0.02 # 0.005~ 0.15 log_destination = 'csvlog' data_sync_retry = off full_page_writes = on

2、环境参数

log_timezone = 'PRC' datestyle = 'iso, mdy' timezone = 'PRC' lc_messages = 'C' lc_monetary = 'C' lc_numeric = 'C' lc_time = 'C'

3、动态参数

port = 5432 # 监听端口 max_connections= # 规格内存(GB)*1000*(1/4)/10 + superuser_reserved_connections shared_buffers= # IF use hugepage: 规格内存*(1/4) ELSE: min(32GB, 规格内存*(1/4)) max_prepared_transactions # max_prepared_transactions=max_connections work_mem # max(min(规格内存/4096, 64MB), 4MB) maintenance_work_mem # min( 8G, (主机内存*1/8)/max_parallel_maintenance_workers ) autovacuum_work_mem # min( 8G, (规格内存*1/8)/autovacuum_max_workers ) max_parallel_maintenance_workers # min( max(2, CPU核数/2) , 16 ) max_parallel_workers_per_gather # min( max(2, CPU核数-4) , 24 ) max_parallel_workers # min(max(2, CPU核数-4) ,32) max_wal_size # min(shared_buffers*2, 用户存储空间/10) min_wal_size # min(shared_buffers/2 , 用户存储空间/10) max_sync_workers_per_subscription # min ( 32 , max(2, CPU核数-4) ) effective_cache_size # 规格内存*0.75 autovacuum_max_workers # max(min( 8 , CPU核数/2 ) , 5) synchronous_commit = off # 当高并发写事务遇到了WAL瓶颈时,优先考虑提高磁盘IOPS能力,如果需要立即提升性能可以使用异步提交,或开启分组提交##根据实际情况开启归档archive_mode = on archive_timeout = '5min' #archive_command='test ! -f /disk1/digoal/arch/%f && cp %p /disk1/digoal/arch/%f'# 也可以先设置为如下,规划好归档目录后再设置。# archive_command = '/bin/date' # 开启归档,但是不COPY归档文件, ##根据实际情况设置预加载的SO库shared_preload_libraries='pg_stat_statements,auth_delay,auto_explain' ##根据实际情况,设置SSL链路#ssl=on #ssl_cert_file = 'server.crt' #ssl_key_file = 'server.key'

4、建议只允许用户修改如下配置,默认值以及允许用户修改的范围:

enable_partitionwise_join = on # on, off enable_partitionwise_aggregate = on # on, off jit = off # on, off temp_file_limit= 规格内存大小 # -1, 16MB ~ 1024000MB wal_level=replica # replica , logical wal_keep_segments=0 # 0 ~ 1024000 track_commit_timestamp=off # off, on vacuum_defer_cleanup_age=0 # 0 ~ 5000000 log_min_duration_statement=5s # -1 , 1s ~ 600s log_connections=off # on, off log_disconnections=off # on, off log_duration=off # on, off log_statement='ddl' # ddl, mod, all log_temp_files=128MB # 0, -1, 16MB ~ 1024MB default_transaction_deferrable=off # on, off statement_timeout = 0 # 0, 3s ~ 3600s lock_timeout = 0 # 0, 3s ~ 600s idle_in_transaction_session_timeout = '1h' # 0, 3s ~ 36000s extra_float_digits = 0 # -15~3, int old_snapshot_threshold=-1 # -1, 1min ~ 2880min autovacuum_vacuum_cost_delay = 0 # 0~50 autovacuum_vacuum_cost_limit = 10000 # 5000 ~ 10000 shared_buffers=min(32GB, 规格内存*(1/4)) # min(32GB, 规格内存*(1/4)) ,规格内存*(1/4) autovacuum_vacuum_scale_factor = 0.02 # 0.005~ 0.15

如果加了LOG审计的采样插件,再加上对应参数,允许用户修改,以及允许用户可以修改的范围。

以上参数如果是生产环境建议慎重执行,一定要理解清里面的意思,然后再放上去。

后面会分享更多devops和DBA方面的内容,感兴趣的朋友可以关注下~

如果你觉得这篇文章对你有帮助, 请小小打赏下.